I’ve been trying out a new technique to plug in knowledge and capabilities to AI apps like chat, using an emerging open standard from Anthropic called Model Context Protocol (MCP). It’s early days but it has momentum.

So I would say that right now is the time for organisations to jump in with some learning through building – let me unpack how I got to that conclusion, what I built, and what I learnt (also: how I can help).

This is a long one! At the bottom there are a couple screen grabs of a prototype so stick around…

Take a step back: the problem with AI chat

There are tons of possible AI applications (I mapped the landscape) but let’s zoom in on chat because you’ve almost certainly used ChatGPT or Claude.

You rapidly hit two walls with chat:

- Tools. You can get an AI to write you a letter or summarise a doc in chat. But if you want it to: add up some numbers; book an Airbnb; or use an app on your intranet, then the AI needs to have access to extra tools.

- Knowledge. Large language models half-remember their training data and then hallucinate. So what you need is ground truth data sources: the ability for the AI to search your notes, or Wikipedia, or the customer service Notion, and then construct its answers out of facts in real-time. (It turns out that searching a data source is just a special form of tool.)

Tools! i.e. if AI chat is going to “cross the chasm,” how is it to be made extensible?

Ok, so the aim is that our chat apps will become extensible tool-using agents. Agents are just AIs that can choose for themselves what tools to use and keep running in a loop until they’re done. I talked before about agents and how agents will find tools to use (2024).
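To make “running in a loop” concrete, here’s a minimal sketch in TypeScript. Everything in it is hypothetical and for illustration only: the `Tool` and `Decision` types, the `fakeModel` function standing in for a real LLM, and the `add` tool. It is not any vendor’s API.

```typescript
// A tool is a named function the model may choose to call.
type Tool = { name: string; run: (input: string) => string };

// What the model decides each turn: call a tool, or finish with an answer.
type Decision =
  | { kind: "call"; tool: string; input: string }
  | { kind: "done"; answer: string };

// Hypothetical stand-in for an LLM: it asks for the "add" tool once,
// then answers using whatever the tool returned.
function fakeModel(history: string[]): Decision {
  const last = history[history.length - 1] ?? "";
  if (last.startsWith("tool:")) {
    return { kind: "done", answer: `The total is ${last.slice(5)}` };
  }
  return { kind: "call", tool: "add", input: "2,3,4" };
}

// The agent loop: ask the model, run the tool it picked, feed the result
// back in, and repeat until the model is done (or a step limit is hit).
function runAgent(
  prompt: string,
  tools: Tool[],
  model: (history: string[]) => Decision,
  maxSteps = 5
): string {
  const history = [prompt];
  for (let step = 0; step < maxSteps; step++) {
    const decision = model(history);
    if (decision.kind === "done") return decision.answer;
    const tool = tools.find((t) => t.name === decision.tool);
    const result = tool ? tool.run(decision.input) : "unknown tool";
    history.push(`tool:${result}`);
  }
  return "gave up after too many steps";
}

// A toy tool that adds up comma-separated numbers.
const add: Tool = {
  name: "add",
  run: (input) =>
    String(input.split(",").reduce((sum, n) => sum + Number(n), 0)),
};

const agentAnswer = runAgent("add up 2, 3 and 4", [add], fakeModel);
console.log(agentAnswer);
```

The point is the shape: the model picks the tool, the app runs it, and the result goes back into the conversation. Swap in more tools and a real model and that’s an agent.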

But, before tool discovery, who will build them?

There’s a bottleneck here: OpenAI and Anthropic and the rest can’t develop tools for every single database and every web service out there. We need an ecosystem, just as the ecosystem makes apps (not just Apple) and the ecosystem makes websites (not just AOL or - present day - Automattic).

So now we’ve got a multi-actor coordination problem.

Spoiler: this is where the open standard Model Context Protocol comes in, and that’s the topic of this post. But first… should you care? Yes.

A protocol sounds very technical. Why should I care?

Sure it’s handy to have a new technology enabler (lots of people adopting the same standard will make it cheaper to build a customer service bot, say) but actually MCP and similar protocols matter a great deal to every organisation that does business online.

Protocol adoption matters because of what users will do next.

So, a side quest into search and what it’s for…

Let’s jump ahead and imagine that Anthropic’s ambitions with MCP work out, and AI chat does get useful in a general purpose sense.

What happens? ChatGPT, or Claude, or Perplexity, or DeepSeek will displace Google as point of first intent for users. That’s a big habit to change I know. But Google is vulnerable.

Not because of anything that Google has done but because of search itself.

See, “search” has never just been “search.” Search has always been an epistemic journey. You’re building knowledge, you’re not in and out with one query. You google first with a vague intention, you learn some of the vocabulary. You google more, back and forth, and you pick up the terminology and trade-offs – whether you want a hotel or Airbnb, that kind of thing, or which dentist is on your bus route, or that your initial question wasn’t what you meant. Finally you know how to frame your real query, with well-known terms, you perform your actual transaction (book your hotel, write your report, after fighting past the pop-ups and the slop results), and you’re done.

Btw I did a talk about this at The Conference in 2022 (YouTube) and also wrote up some background on epistemic agents.

Search engines aren’t a great fit for epistemic journeys. It’s a messy process. But it’s what actually happens.

Enter chat.

Chat is a great fit for the “job to be done” of search.

I hit up Claude a couple dozen times a day, and in the last few weeks I have

- explored running in cold air and how to relieve stress on my lungs

- debugged a session cookie issue with a website I’m building

- got birthday present ideas for an X year old kid, from general to specific

- checked whether monkey puzzle trees preceded the Permian-Triassic boundary (not quite)

- looked up data plane and control plane and where it breaks down

- got my timelines straight around the Hanoverian succession then rabbit holed into the emergence of modern parliamentary democracy

- designed a format for a family pool tournament.

I bet you’re the same.

The thing is, when I type “ideas what to do with the kid this weekend in London. it’s raining” then how is your business present?

This isn’t just SEO. Google is upstream of almost every user journey and every customer interaction there is, and I think we underestimate how deeply the concept of “Google is the front door to the internet” is embedded into companies after 20 years of digital.

People’s promotions are based on whether their project to increase click-throughs on their specific page on the site hits its OKRs; teams are trained to write support articles that are findable by search engines and somebody had to deliver that training material; priorities for the next quarter are made based on site traffic analytics; the MVP of a new initiative concludes with “we shipped the new landing page”; you’re on a 10 year contract for a content management system that is built around web workflows. None of that will hold true any longer.

So organisations will be in a situation where not only are they not present and showing up in AI chat (so people never visit their parks or buy their bikes) but, for team and infrastructure reasons, they can’t adapt.

For many this will be an existential problem.

It’s not an impossible problem to wrap your arms around. It decomposes into practical challenges that, once revealed, can be tackled. Like: What’s the best way to store content to be made available to AI chat? How can user accounts be connected into ChatGPT or whatever? How do you measure success, or prioritise initiatives? How do you automate ongoing competitor awareness? And so on.

The difficulty is, well, how to reveal those questions?

The answer: you build and learn. Create prototypes, shake out the integration blockers. (Big things, yes, but also tiny tricky things, like possibly you shouldn’t have used HTML tags in your customer support knowledge base and now you need a clean-up project.)

Until now the barrier to entry for prototyping has been too high. It would be disproportionate to build a ChatGPT clone just to pathfind the future of your business.

But if there’s an ecosystem of protocols and pre-built technology… well… MCP means that prototyping is something you can do today.

And there is some urgency about this!

My best guess is we’ll see an acceleration of the search issue in about 6 months. I’ll come back to why below.

Now that’s all about chat vs search. But the difference with AI agents is that entire transactions can be completed in chat (which might be text or might be voice or something else). You wouldn’t book a holiday in a search engine. The technical consequences there run even deeper, and that’s the bet that Stripe is making with payments infrastructure for AI agents for example. But let’s start with the easy stuff.

What is Model Context Protocol and where does it fit in?

Recap: AI chat is already popular and, to get more popular, it needs plug-ins. Which means developers need to collaborate with AI chat app creators, which means everyone needs to agree on a standard.

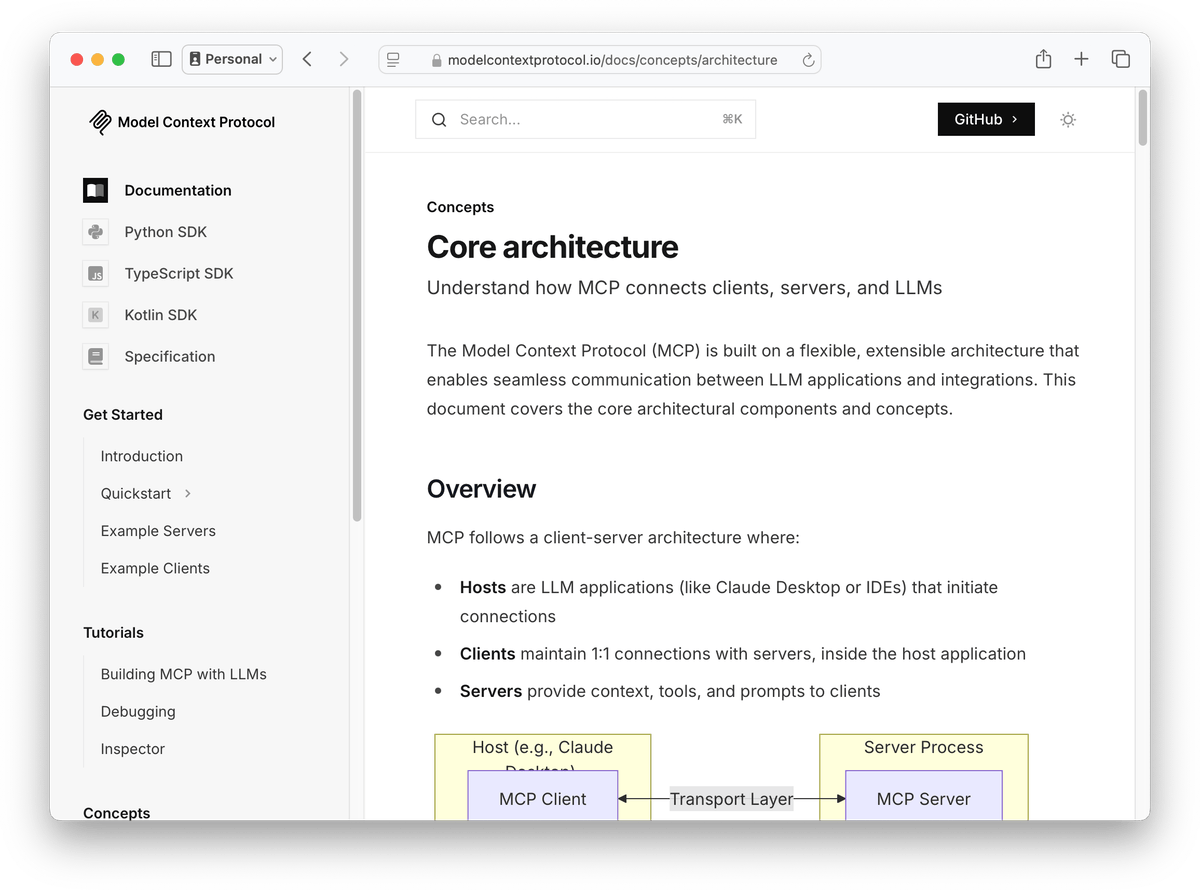

That’s where Model Context Protocol comes in. Proposed by Anthropic in November 2024:

“MCP is an open protocol that standardizes how applications provide context to LLMs. Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.”

Here’s the MCP documentation.

A warning: it’s complicated. There are a ton of use cases to consider and so this standard is the opposite of simple. It’s a protocol with alternate transport layers, that level of complexity.
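Whatever the transport, the messages themselves are JSON-RPC 2.0, and the spec defines methods such as `tools/list` and `tools/call`. Here’s a rough sketch of what a client sends. The helper functions, the `search_episodes` tool name and its `query` argument are my own placeholders, not part of the spec.

```typescript
// Shape of a JSON-RPC 2.0 request, which MCP uses for its messages.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// Each request needs its own id so replies can be matched up.
let nextRequestId = 1;

// Ask the server which tools it offers.
function listToolsRequest(): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextRequestId++, method: "tools/list" };
}

// Ask the server to run one named tool with some arguments.
function callToolRequest(
  name: string,
  args: Record<string, unknown>
): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id: nextRequestId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

const listReq = listToolsRequest();
// "search_episodes" and "query" are hypothetical names for illustration.
const callReq = callToolRequest("search_episodes", {
  query: "evolution of teeth",
});
console.log(JSON.stringify(callReq, null, 2));
```

This leaves out the initial handshake, capabilities, resources, prompts and the rest, which is where much of the complexity lives.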

Look, proposed standards are ten a penny. Is this one in particular worth paying attention to? Yes.

How is MCP doing? Pretty well, it turns out

Here’s the top line: Anthropic is doing a good job! Yes the protocol is complicated. But it needs to be. Already people have used it to build plug-ins (called “servers”) for everything from searching your Google Drive to querying Snowflake BI databases to using Ticketmaster.

More than that, MCP is showing good traction:

You can judge the strength of a protocol on the diversity and activity of the community.

Check out the GitHub for modelcontextprotocol projects: it’s busy, and there are well-used SDKs for TypeScript, Python and Kotlin.

A plug-in that makes tools and knowledge available to an AI app is called an “MCP server.” I mentioned providing access to services like Google Drive. You can also run an MCP server to connect to a business platform like HubSpot, or personally to control your smart home. There are already multiple directories where people are sharing ones they’ve built:

The servers are legit. This is some real activity.

An AI app that uses these servers is called a “client”. For a real standard you want many clients.

Here’s a matrix of existing clients. To highlight two:

- If you use Claude then Claude for Desktop will let you add MCP servers – it doesn’t yet work on the web.

- There’s also codename goose from Block. If you want a developer-friendly experience to work with MCP servers directly, use this.

Project activity: big tick.

Although, another warning: it is early, “developer preview” days for MCP.

For example, you can’t even make a server for other people to connect to. You have to download it, make sure you have any technical requirements installed, run it on your own machine, etc, and it is fiddly. You will be editing JSON config files and digging through technical documentation.
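To give a flavour of that JSON editing: Claude for Desktop reads a config file with an `mcpServers` section, where each entry tells the app what command to run to start a server locally. Below is a sketch that builds such a file in TypeScript. The server name, command and path are placeholders I’ve made up; check your client’s documentation for the exact file location and options.

```typescript
// One entry per server: the command the client runs to start it locally.
interface ServerEntry {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

interface ClientConfig {
  mcpServers: Record<string, ServerEntry>;
}

// Hypothetical entry: the name, command and path are placeholders.
const clientConfig: ClientConfig = {
  mcpServers: {
    "example-server": {
      command: "node",
      args: ["/path/to/example-server/index.js"],
    },
  },
};

// This string is what you would paste into the config file.
const configJson = JSON.stringify(clientConfig, null, 2);
console.log(configJson);
```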

Will it get easier? Yes. This year. To provide remote access to tools for AIs, first you need to figure out things like rock solid authentication. That’s a lot of work. So remote access is on the roadmap for the first half of 2025.

Pay attention to this project, is what I’m saying. There’s energy here and the direction is good.

Will MCP be the only game in town?

No.

Absolutely not. It’s too early for a single standard.

And like I said, MCP is necessarily complicated. The downside of being flexible, and being first, and doing things at Anthropic scale is it adds complexity, and that will be friction on developer adoption.

At the very least I would expect “enterprise” and “everything else” to coalesce on different standards. We’ll need something with the ease of Open Graph, if you know that from the SEO world.

Personally I’m watching Unternet. Their Web Applets spec covers overlapping ground but with the simplicity and distributed nature of the web. (They’re partnered with Mozilla too.)

(A side note: I am loving this return to interop and protocol thinking. It’s healthy and vibrant. Early internet vibes.)

So I should skip MCP and wait for whatever’s next?

Also no.

MCP is the first time I can say: here’s a (relatively) easy way to connect your organisation’s tools and knowledge into an AI chat app and see what you learn.

Those lessons will be applicable whether we’re all building MCP servers in the future or not.

Because this is happening soon.

Remember I said that there are already 1,000s of MCP servers, and remote access is coming by mid 2025? There will suddenly be hosted MCP servers for all your favourite web apps, and Anthropic will make them available inside Claude on the web, and do you think OpenAI will sit still with ChatGPT, or even Google?

My guess is that we’ll see noticeable chunks being bitten out of traditional search by the end of this year.

(Sooner or later Apple will get in on the game because Apple Intelligence is architected for 3rd party tools too (2024) but I suspect that will take longer.)

Hey so I built my own MCP server and here’s what I learnt

I don’t want you to think that I have all these opinions without actually building something.

My testbed for “boring AI” (the best kind of AI) is always my unofficial BBC In Our Time archive Braggoscope. (In December I added a visual explorer using embeddings.)

There’s a back catalogue of over 1,000 podcast episodes on everything from the Greek myths to the evolution of teeth.

So I wanted to use AI chat to find what episode to listen to next.

Now, I am all-in on Cloudflare for 2025 so I used Cloudflare Workers and a new Cloudflare-provided library called workers-mcp to spin up an MCP server to search Braggoscope. Here’s Cloudflare’s guide on building an MCP server on their platform.

I already have all the episode descriptions in a vector database for semantic search so I was able to re-use that.
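If semantic search is new to you, the core idea fits in a few lines. This is an illustration only, not the Braggoscope code: the episode titles and three-number “embeddings” are invented, and a real setup would get its vectors from an embedding model and let a vector database do the ranking rather than brute force.

```typescript
type Episode = { title: string; embedding: number[] };

// Cosine similarity: 1 means same direction, 0 means unrelated.
// Assumes both vectors have the same length.
function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  a.forEach((value, i) => {
    const other = b[i] ?? 0;
    dot += value * other;
    normA += value * value;
    normB += other * other;
  });
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Rank every episode by similarity to the query vector, best first.
function semanticSearch(
  query: number[],
  episodes: Episode[],
  limit: number
): Episode[] {
  return [...episodes]
    .sort(
      (x, y) =>
        cosineSimilarity(query, y.embedding) -
        cosineSimilarity(query, x.embedding)
    )
    .slice(0, limit);
}

// Made-up data: hand-written toy vectors, not real embeddings.
const toyEpisodes: Episode[] = [
  { title: "Episode about Greek myths", embedding: [0.1, 0.9, 0.1] },
  { title: "Episode about teeth", embedding: [0.9, 0.1, 0.0] },
  { title: "Episode about parliament", embedding: [0.0, 0.2, 0.9] },
];

// Pretend this vector is the embedding of the user's question.
const toyQuery = [1, 0.2, 0];
const topMatches = semanticSearch(toyQuery, toyEpisodes, 2);
console.log(topMatches.map((e) => e.title));
```

With these toy numbers the teeth episode ranks first and the Greek myths episode second.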

This server runs remotely, so I’m prepared for remote access when it eventually arrives, but today it still needs a local gateway.

i.e. sorry, you can’t use this yet. But I can show you a screen grab.

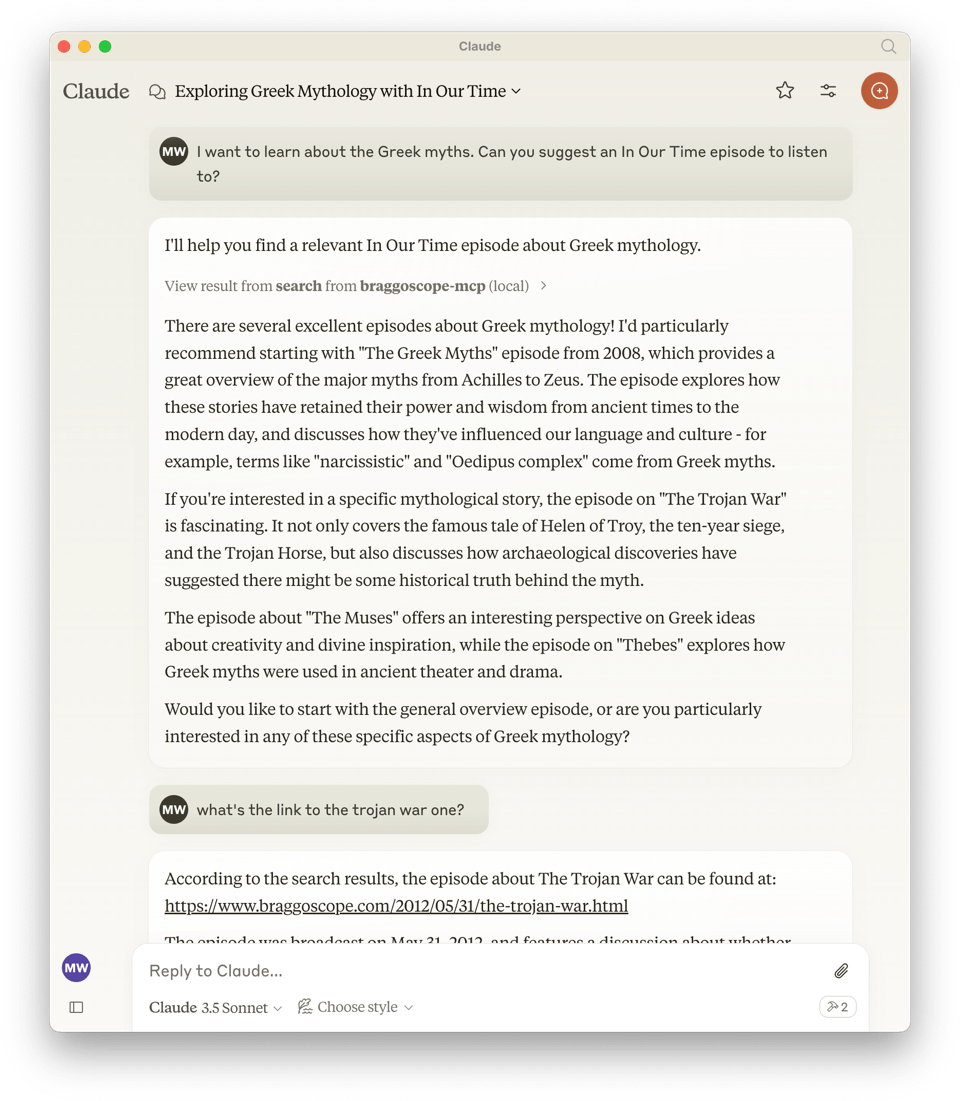

Here’s what it’s like to use in Claude.

What’s interesting is that Claude treats my Braggoscope MCP server a bit like side-loading knowledge, its own Neo in the Matrix “I know kung fu” moment. There was nothing in the data source that said “this is excellent” – the top episode was just a good match for the search term, and it confabulated from there.

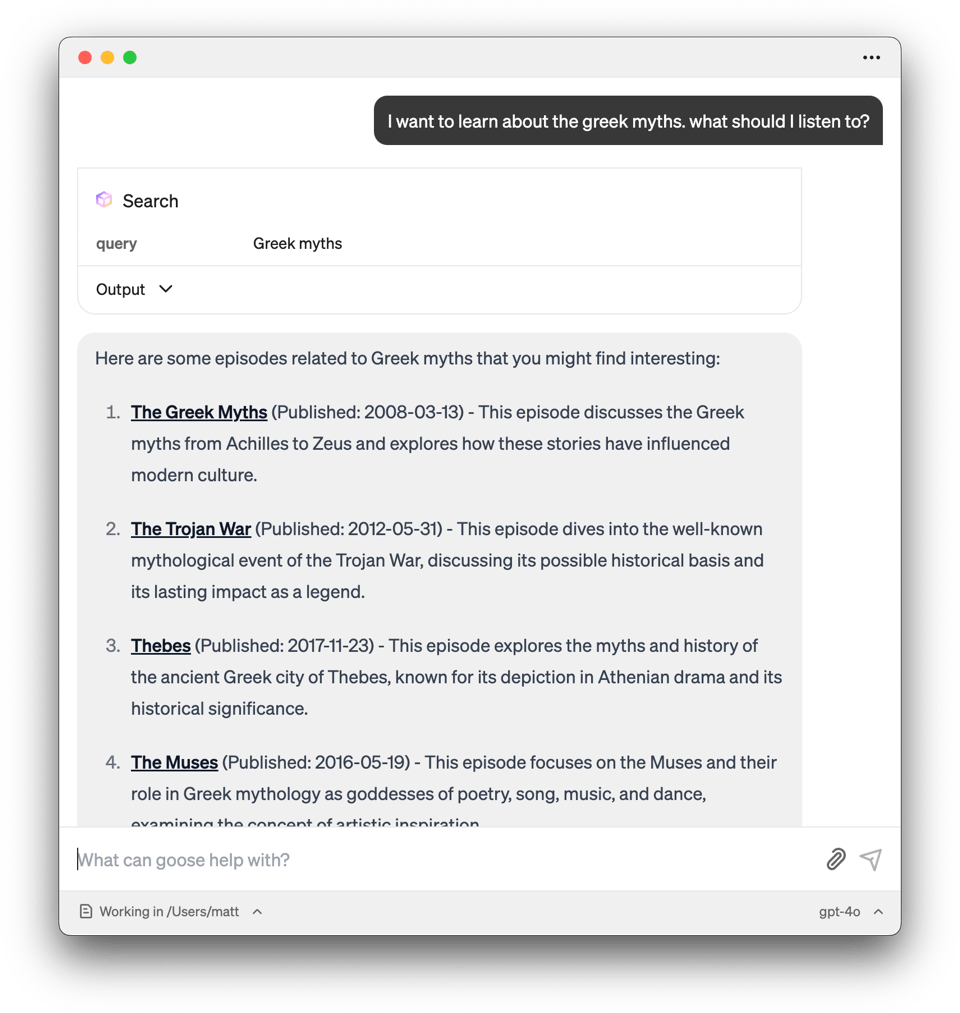

Although MCP is an Anthropic-initiated project, Claude is not the only client. I mentioned goose above and here it is.

Goose is more technical - you have to paste in your own LLM API key - and a great workbench for experimenting with MCP servers.

Here I’m using OpenAI’s gpt-4o model without a Claude/ChatGPT system prompt. It imposes less character; the list of episodes is more like a search results page. I can still chat with GPT using the episode description as a springboard so the epistemic journey is still there. But the initial vibe is very different.

What did I learn?

From a practical tech point of view, I’m going to have to find ways to keep the Braggoscope database of episodes in sync with the semantic search database.
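One simple way to think about the sync problem is as a diff between two lists of IDs. A minimal sketch, assuming both stores can list their episode IDs (the function name and the IDs are hypothetical, and it ignores episodes whose text has changed):

```typescript
// Compare what the main database has with what the search index has.
function planSync(sourceIds: string[], indexedIds: string[]) {
  const source = new Set(sourceIds);
  const indexed = new Set(indexedIds);
  return {
    // In the database but not yet searchable: embed and add these.
    toAdd: sourceIds.filter((id) => !indexed.has(id)),
    // Searchable but gone from the database: remove these.
    toRemove: indexedIds.filter((id) => !source.has(id)),
  };
}

// Made-up IDs for illustration.
const syncPlan = planSync(["ep-1", "ep-2", "ep-3"], ["ep-2", "ep-3", "ep-4"]);
console.log(syncPlan);
```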

More than that, I can now provide faceted search with a level of complexity that I would never, ever put in a web UI: as a user I want to be able to say “uh there was an episode maybe 2014, around then, and Edith Hall was a guest, what could that be?”

A human wouldn’t have the patience to fill out the search form. An AI does. I have a new tool to build!
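Here’s roughly what a faceted search tool like that could look like behind the scenes. It’s a sketch under assumptions: the `Facets` fields are my guess at useful facets, and the records are invented placeholders rather than real episode data. The AI’s job is to turn a vague request (“maybe 2014, around then”) into arguments such as a year range.

```typescript
type EpisodeRecord = { title: string; year: number; guests: string[] };

// Every facet is optional: the AI fills in only what the user mentioned.
type Facets = { yearFrom?: number; yearTo?: number; guest?: string };

function facetedSearch(
  records: EpisodeRecord[],
  facets: Facets
): EpisodeRecord[] {
  return records.filter((record) => {
    if (facets.yearFrom !== undefined && record.year < facets.yearFrom) {
      return false;
    }
    if (facets.yearTo !== undefined && record.year > facets.yearTo) {
      return false;
    }
    if (facets.guest !== undefined) {
      // Case-insensitive partial match on guest names.
      const wanted = facets.guest.toLowerCase();
      const found = record.guests.some((g) =>
        g.toLowerCase().includes(wanted)
      );
      if (!found) return false;
    }
    return true;
  });
}

// Invented placeholder records, not real episodes.
const sampleRecords: EpisodeRecord[] = [
  { title: "Episode A", year: 2013, guests: ["Guest One"] },
  { title: "Episode B", year: 2014, guests: ["Guest One", "Guest Two"] },
  { title: "Episode C", year: 2015, guests: ["Guest Two"] },
  { title: "Episode D", year: 2019, guests: ["Guest One"] },
];

// "Maybe 2014, around then" becomes a range of a year either side.
const facetMatches = facetedSearch(sampleRecords, {
  yearFrom: 2013,
  yearTo: 2015,
  guest: "guest one",
});
console.log(facetMatches.map((r) => r.title));
```

With this sample data the search returns Episode A and Episode B.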

From an experience/brand point of view, I would prefer that Claude didn’t “speak” for Braggoscope. So I need to find a way to steer its voice.

If I were the BBC, with a huge availability of programmes and podcasts, I would need to find a way to connect to user accounts: episode search really needs to be personalised – the AI should remember what shows I’ve listened to before. It needs signals for ranking.

Additionally I want a way to add a little serendipity. Maybe the “search” tool should also provide something radically different as a kicker at the end of the results set. This is the same as the way that a broadcaster will advertise a very different show at the end of a hugely popular one. Sharing the audience is important for them! And also important for me – even in this simple integration, as a user I felt like I needed more variety.
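One possible way to do the kicker, sketched with made-up scores: return the top matches as usual, then tack on the weakest match from everything left over. Picking the lowest score is just one assumption about what “radically different” could mean; a random pick or a different category would work too.

```typescript
type ScoredResult = { title: string; score: number };

// Return the best `limit` results plus one deliberately distant "kicker".
function withKicker(results: ScoredResult[], limit: number): ScoredResult[] {
  const ranked = [...results].sort((a, b) => b.score - a.score);
  const top = ranked.slice(0, limit);
  const rest = ranked.slice(limit);
  // The last item of the remainder is the weakest match overall.
  const kicker = rest.pop();
  return kicker ? [...top, kicker] : top;
}

// Invented scores for illustration.
const scoredResults: ScoredResult[] = [
  { title: "Close match 1", score: 0.9 },
  { title: "Far away", score: 0.1 },
  { title: "Close match 2", score: 0.8 },
  { title: "Middling", score: 0.5 },
];

const resultsWithKicker = withKicker(scoredResults, 2);
console.log(resultsWithKicker.map((r) => r.title));
```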

Anyway, it goes to show that this isn’t an entirely technical exercise.

Let’s build AI chat plug-ins together

With Model Context Protocol, your takeaway should be that this is the first time you can (relatively) easily prototype using real systems and discover and iterate on

- what it’s going to be like for the user

- technical implications

- organisational implications

…all at the same time.

As an organisation, you should build simultaneously with both MCP and another spec like Web Applets to avoid being skewed too much by a single implementation. Use multiple compatible AI chat clients to do some informal user testing.

I wouldn’t call this prototyping. The prototype is in service of a pathfinding exercise. You’d follow up with a share-out and possible implications session, that kind of thing.

Anyway.

I want to build some knowledge here, and that means getting my hands dirty. I’d like to see real issues inside a handful of partners and figure out the commonalities.

So if you want to build prototypes using MCP, I can help.

Let’s see if there’s demand: this can be a project package via my micro studio Acts Not Facts because there are a few things it should involve. We’ll figure out some easy time + materials way to work together. Get in touch.
